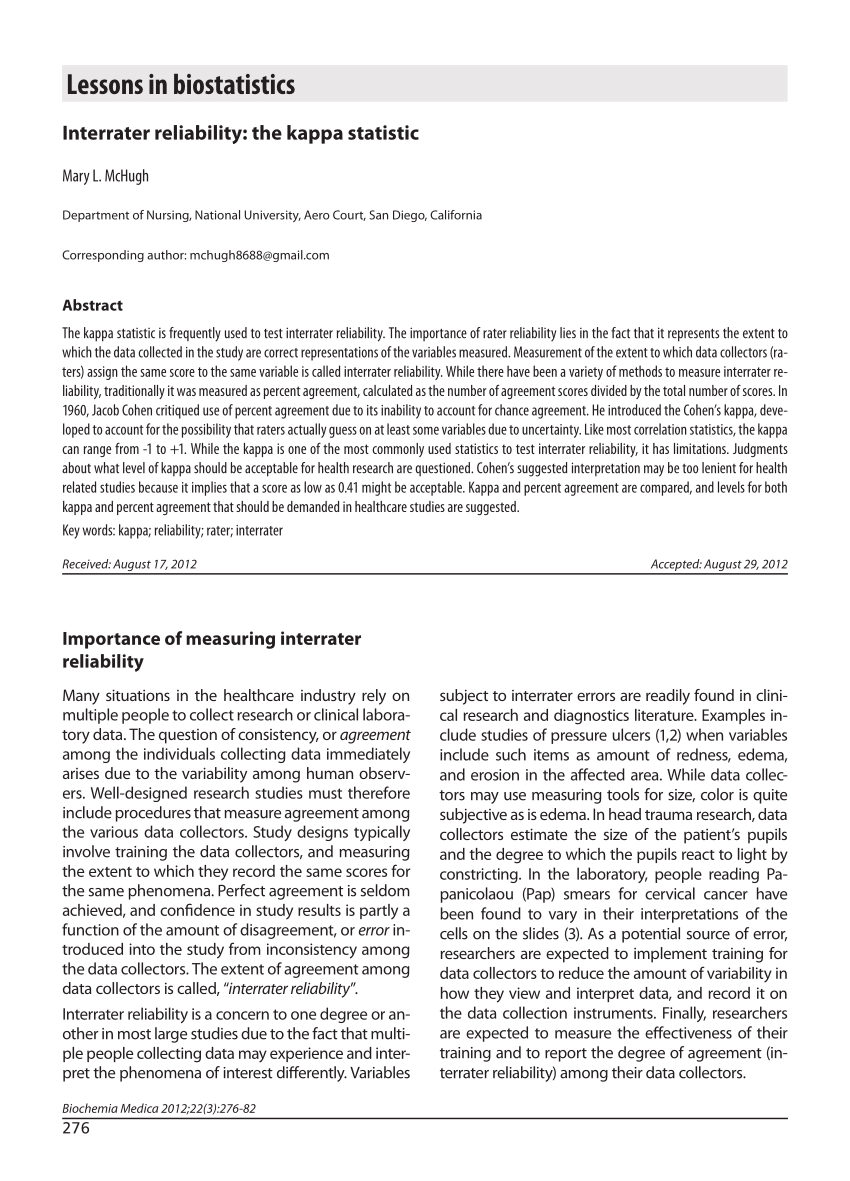

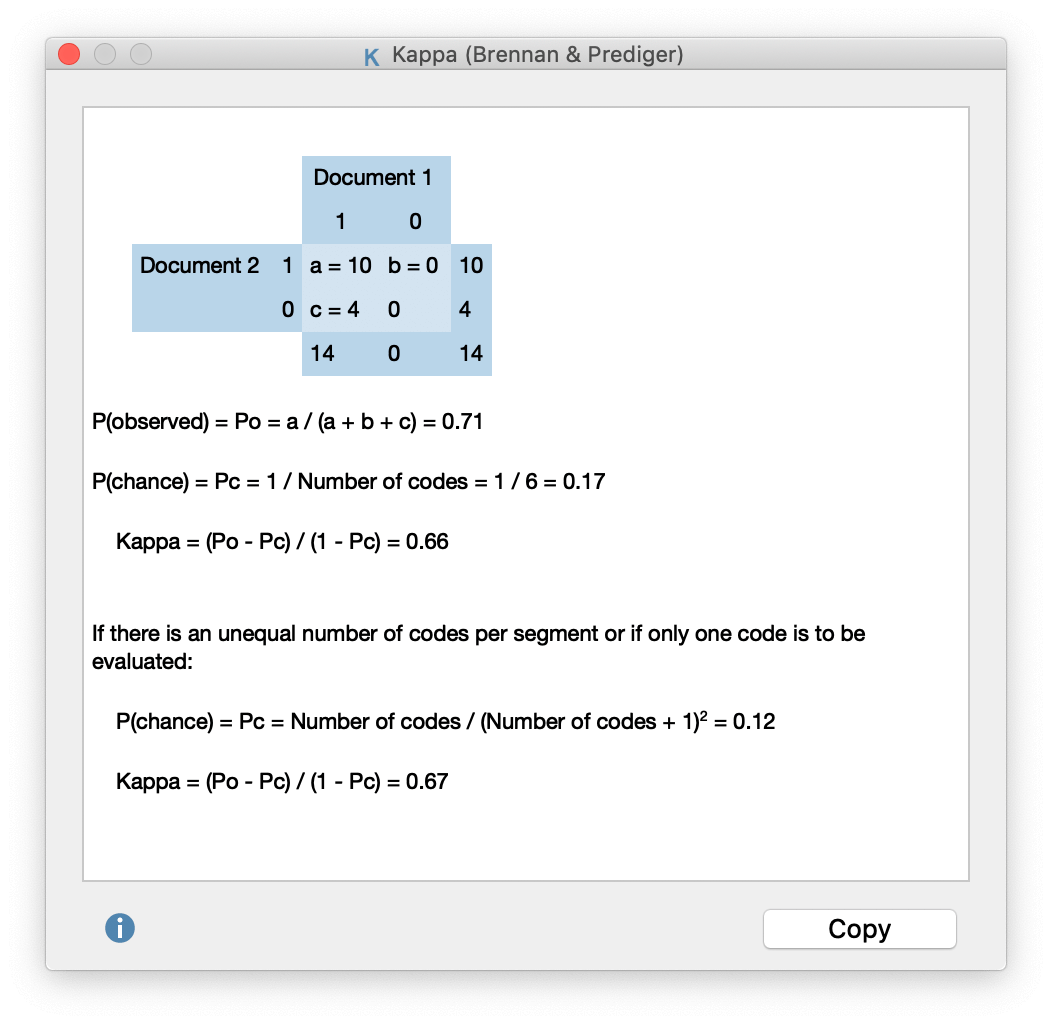

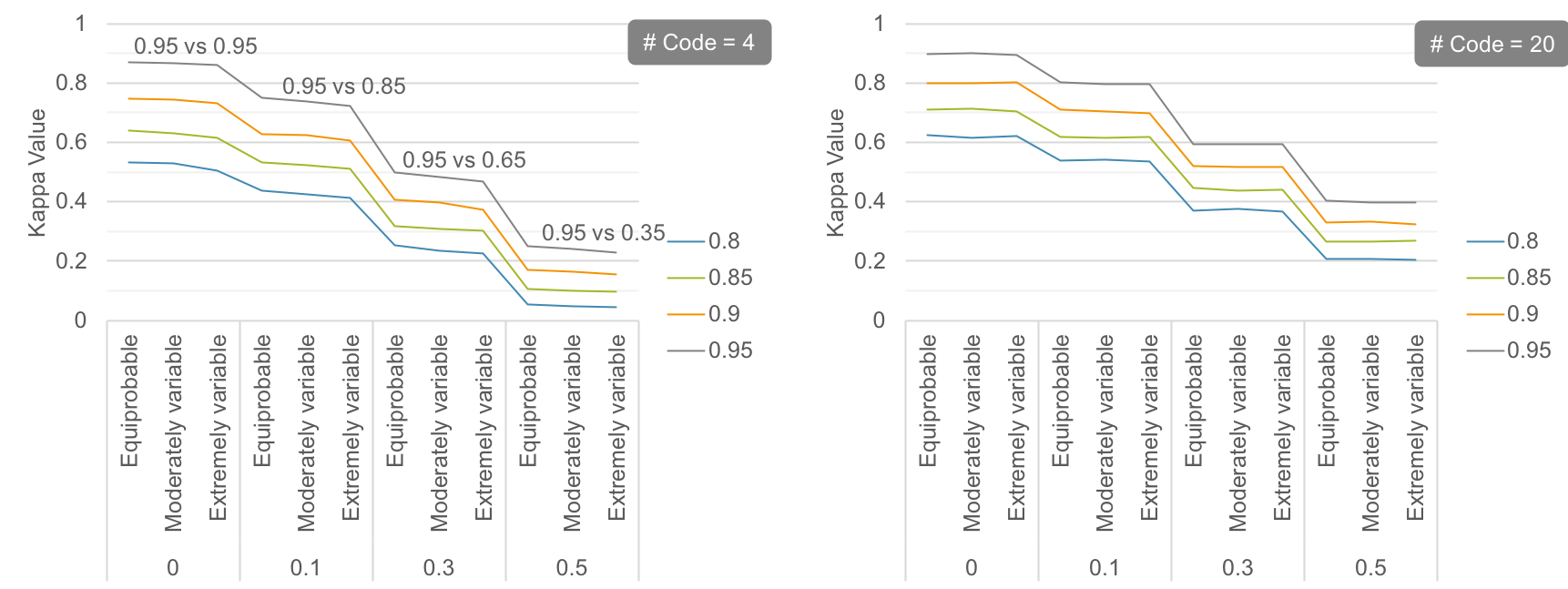

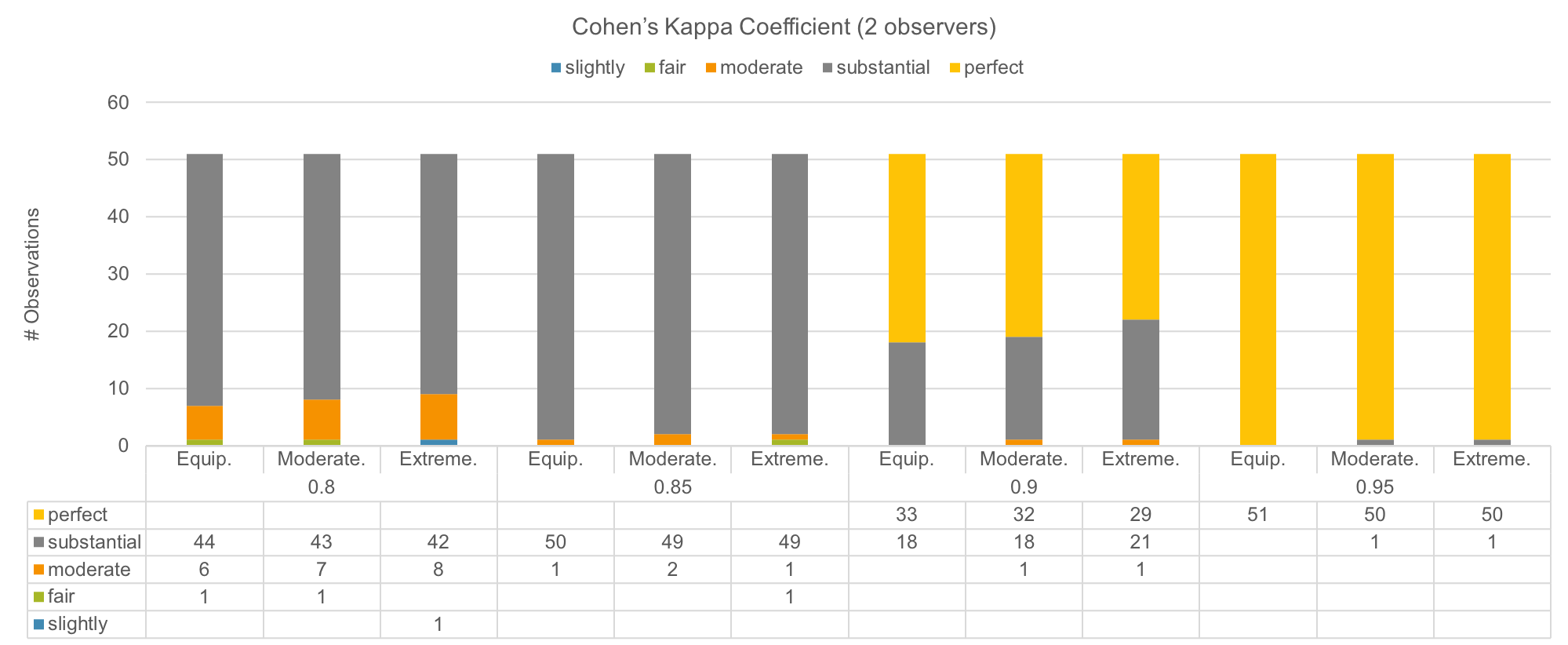

Interpretation of Kappa Values. The kappa statistic is frequently used… | by Yingting Sherry Chen | Towards Data Science

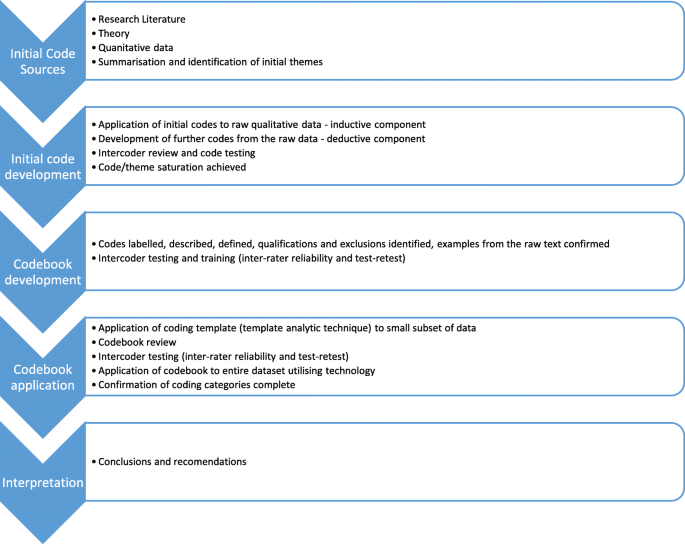

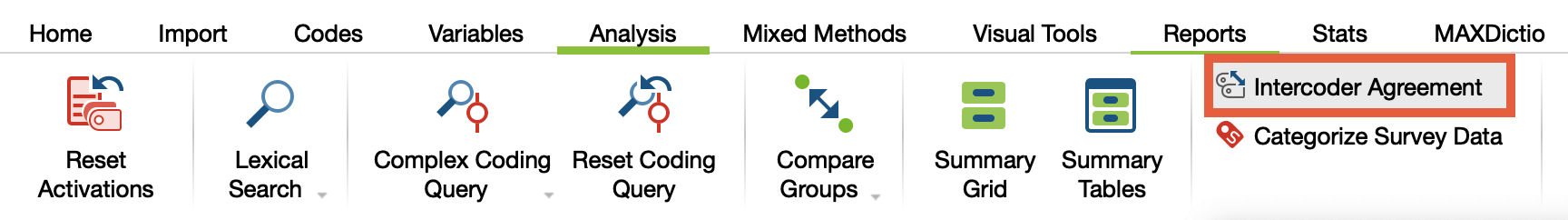

Challenges and opportunities in coding the commons: problems, procedures, and potential solutions in large-N comparative case studies

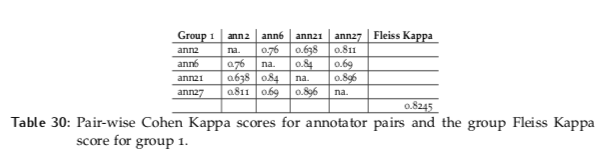

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science